“Legislation is lagging behind” is something we often hear when it comes to artificial intelligence. On the one hand, the field continues to advance rapidly, making all sorts of interesting applications possible. On the other, not everything that is technically possible should be done.

To balance the use of AI within its borders, the EU is currently working on its Artificial Intelligence Act (AIA). The Act is meant to clarify what can actually be done with AI, and what needs to be considered if AI is applied in a so-called high-risk context.

This new legislation can be viewed from various perspectives. This blog focuses on what needs to be fixed technically to make AI that is categorized as high-risk comply with the AIA. The requirements will only become mandatory at least two years after the AIA enters into force. Even so, knowing how to meet them is already relevant, because most of them can already help companies minimize the risk of an irresponsible implementation of AI.

What is the Artificial Intelligence Act (AIA) and what does it do?

In 2021, the European Commission proposed a new EU regulatory framework on artificial intelligence: the Artificial Intelligence Act (AIA). It is the first law on AI proposed by a major regulator anywhere in the world. In June 2023, the European Parliament adopted its position on the AI Act. This means the proposal has now entered the final negotiations towards becoming law.

This regulation has the twin objective of promoting the uptake of AI on the one hand, and of addressing the risks associated with certain uses of such technology on the other. It aims to give people the confidence to embrace AI-based solutions while ensuring that the fundamental rights of European citizens are protected. The AIA is intended only to capture the commercial placing of AI products on the market; it excludes AI systems used solely for the purpose of scientific research.

What is AI according to the EU?

The AIA applies to AI applications, specifically software products that influence the environments they interact with. It covers a range of techniques, including:

- Machine learning

- Expert and logic systems

- Bayesian or statistical approaches

Therefore, almost any software-based decision support system operating within the EU qualifies as AI. These systems are not limited to machine learning; they also include expert rule-based systems. Moreover, the legislation does not only apply to systems made in the EU itself. Any provider or distributor of AI services that reach the EU market needs to be compliant. Exceptions include systems used exclusively for military purposes and certain forms of international law enforcement cooperation.

What are the categories of AI according to the AIA?

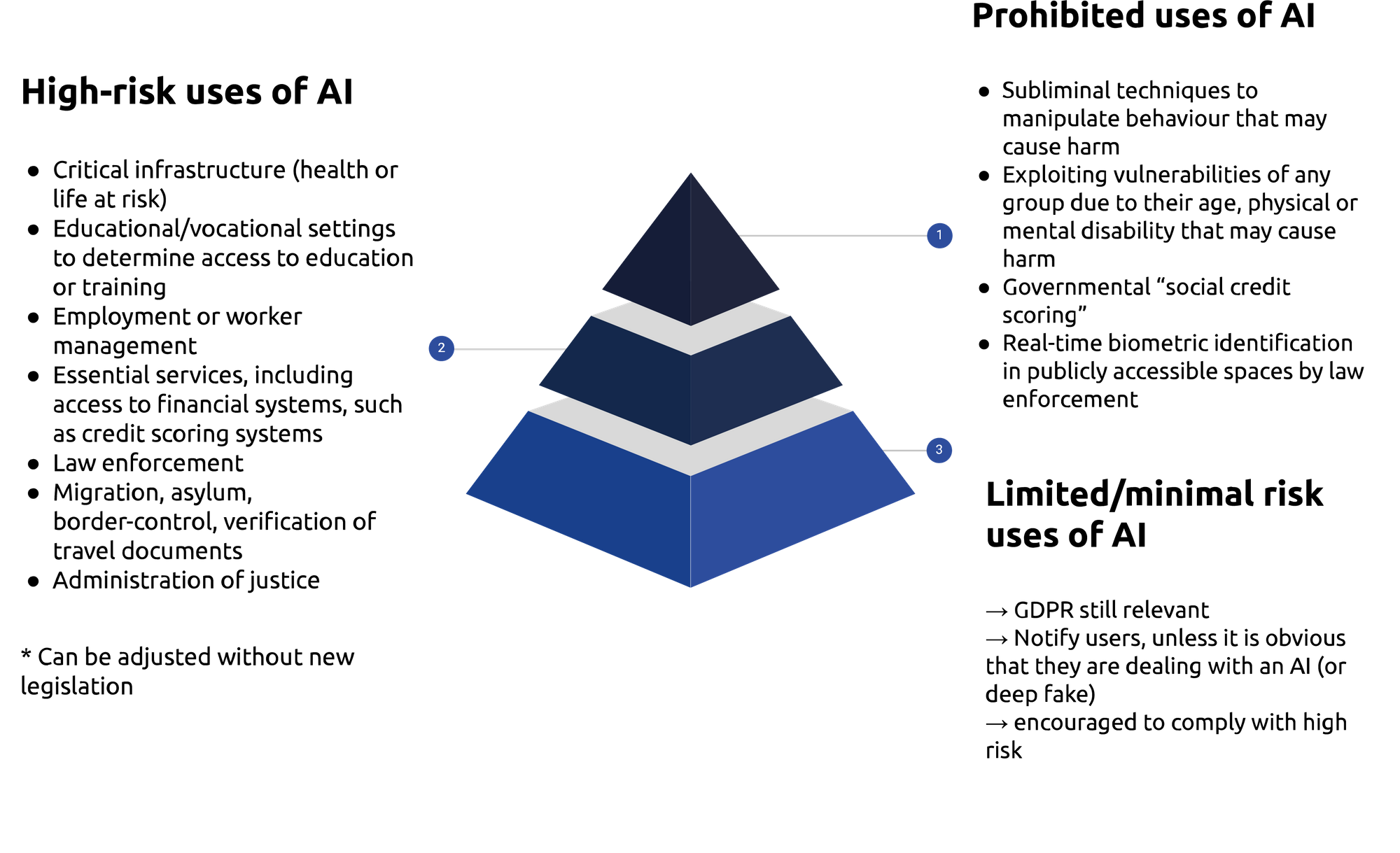

To unravel the AIA, it helps to understand the risk classification it applies. Under the AIA, every AI system can be classified into one of three groups. The grouping depends on the level of risk the system poses to the health, safety or fundamental rights of European citizens. Whether a system counts as high-risk depends not only on the function it performs, but also on the specific purpose and modalities for which it is used.

The AIA applies the following AI systems classification:

Prohibited AI

These AI applications are banned because they pose too much risk to the health, safety or fundamental rights of European citizens. They include, for instance, AI applications used for social scoring by public authorities or private entities.

High-risk AI

‘High-risk’ AI systems must meet a strict set of requirements for trustworthy AI. These are AI applications that may adversely affect human health, safety or fundamental rights. They are gathered in a list of use cases, which the European Commission can amend. The list includes, among others, AI used for recruitment, creditworthiness assessment, biometric identification, and access to education.

Mandatory requirements apply to this type of system, and compliance with them is assessed. In line with the AIA’s risk-based approach, high-risk AI systems are only permitted on the EU market if they have been subjected to (and successfully passed) an ex-ante conformity assessment.

Low-risk AI

These are AI systems that are subject only to limited obligations. This category contains use cases such as emotion recognition and biometric categorization. Obligations similar to those under the GDPR apply here. Users should be notified when they are interacting with such systems. They should also be informed whether their personal data is collected, for what purpose, and whether they are classified into specific categories such as gender, age, ethnic origin, or sexual orientation.


How does the AIA promote AI innovation?

The goal of the EU’s AIA is to create a legal framework that is innovation-friendly, future-proof and resilient to disruption. Therefore, apart from all the requirements and obligations mentioned, the AIA contains measures that support innovation. For instance, it encourages national competent authorities to set up AI regulatory sandboxes, which establish a controlled environment to test innovative technologies for a limited time on the basis of a testing plan.

The Netherlands has yet to gain experience with AI regulatory sandboxes, but they have been tested abroad, in countries such as Norway and the UK. Such sandbox regulation is an appropriate complement to a strict liability approach. It helps balance protective regulation on the one hand against innovation in the rapidly developing AI field on the other*.

What are the necessary technical adjustments to implement if your AI is labeled as high-risk by the AIA?

We will answer this question using AI systems that perform credit scoring as an example. Credit scoring is a statistical analysis, often performed by a machine learning algorithm, that predicts someone’s creditworthiness. Lenders and financial institutions use it, for instance, to assess the creditworthiness of a person or a small, owner-operated business.
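The later requirements are easier to picture with a concrete score in mind. Below is a minimal sketch of a very simple credit score: a logistic model that turns a weighted sum of applicant features into a probability of repayment. The feature names, weights, bias and the 0.5 threshold are all hypothetical values chosen for illustration. They are not taken from any real lender or from the AIA.

```python
import math

# Hypothetical weights for illustration only; a real scorecard would be
# estimated from historical repayment data.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = -1.0

def repayment_probability(applicant: dict) -> float:
    """Logistic model: squash a weighted sum of features into a probability."""
    z = BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))

def credit_decision(applicant: dict, threshold: float = 0.5):
    """Return the probability and whether it clears the (hypothetical) threshold."""
    p = repayment_probability(applicant)
    return p, p >= threshold

p, approved = credit_decision({"income_k": 60, "debt_ratio": 0.3, "late_payments": 0})
print(f"probability={p:.3f} approved={approved}")
```

For this applicant the weighted sum is 0.5, so the probability is about 0.62 and the application clears the threshold.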

The AIA explicitly prohibits ‘social scoring’, in which an AI system assigns, increases or decreases individual scores based on certain behavior for surveillance purposes. Credit scoring sits close to this line, because it carries the risk of using information that is not relevant to the credit score. An example is using ethnic background to decide whether someone can apply for a loan, which increases the chances of discrimination and bias.

For instance, a study conducted at UC Berkeley found that fintech algorithms are less biased than face-to-face lenders, but not free of bias. According to the study, fintech algorithms discriminate about 40% less on average than face-to-face lenders. Even so, they still charge Latinx and African American borrowers extra mortgage interest.

Using financial information to predict creditworthiness is not prohibited outright, however. The AIA classifies it as high-risk AI and allows it only when it complies with the following requirements:

1) Creating and maintaining risk management systems:

It should be possible to identify, estimate and evaluate potential risks related to such systems, e.g. the risk of erroneous or biased AI-assisted decisions due to bias in the historical data.

For example, lower salaries are associated with certain zip codes, such as those where ethnic minorities tend to live. A model can therefore offer lower credit limits to applicants from those zip codes. This can happen even though the model never received race or ethnicity as an input [source: Harvard Business Review].
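One way to check whether a feature such as zip code acts as a proxy is to test how well it predicts the protected attribute on its own. The sketch below uses a hypothetical `proxy_strength` helper with made-up zip codes and group labels. It compares two accuracies: guessing the group from the zip code, and always guessing the largest group. A large difference suggests the feature leaks group membership.

```python
from collections import Counter, defaultdict

def proxy_strength(feature_values, protected_values):
    """Return (accuracy of guessing the group from the feature, baseline accuracy).

    For each feature value, guess the most common group among records with
    that value; the baseline always guesses the most common group overall.
    """
    by_value = defaultdict(Counter)
    for value, group in zip(feature_values, protected_values):
        by_value[value][group] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n
    return correct / n, baseline

# Hypothetical data: zip codes and protected-group labels of past applicants
zips = ["1011", "1011", "1011", "1102", "1102", "1102", "1102", "1011"]
groups = ["B", "B", "B", "A", "A", "A", "B", "A"]
print(proxy_strength(zips, groups))  # (0.75, 0.5)
```

Here the zip code recovers group membership 75% of the time, against a 50% baseline. A more thorough analysis could use a measure such as mutual information, or train a classifier on all features at once.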

Therefore, removing bias from a credit decision requires more than simply removing variables that clearly indicate gender or ethnicity. Biases in historical data should be identified and corrected, yielding a deliberately adjusted, but more accurate credit assessment. One way to adjust for such latent bias is to apply different decision thresholds to different groups, balancing out the effects in the historical data. Toolkits such as IBM AI Fairness 360 and Fairlearn contain tools to detect and compensate for imbalances between groups and individuals.
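The group-specific threshold idea can be sketched in two steps. First, measure the approval rate per group under one shared threshold; the difference between those rates is often called the demographic parity difference. Then choose a separate threshold per group so that both groups are approved at the same rate. The scores, group names and target rate below are hypothetical. Fairlearn offers a comparable post-processing approach in its `ThresholdOptimizer`, but this is a plain-Python illustration, not that library's implementation.

```python
def approval_rate(scores, threshold):
    """Share of applicants whose score reaches the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

def threshold_for_rate(scores, target_rate):
    """Threshold that approves roughly target_rate of this group (ties may approve more)."""
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))  # number of applicants to approve
    return ranked[k - 1]

# Hypothetical model scores for two groups with different historical data
scores = {
    "group_a": [0.9, 0.8, 0.7, 0.6, 0.4],
    "group_b": [0.65, 0.55, 0.45, 0.35, 0.3],
}

# Detection: one shared threshold gives very different approval rates
shared = {g: approval_rate(s, 0.6) for g, s in scores.items()}
parity_gap = max(shared.values()) - min(shared.values())

# Mitigation: per-group thresholds chosen so both groups share one approval rate
target = 0.6
per_group = {g: threshold_for_rate(s, target) for g, s in scores.items()}
adjusted = {g: approval_rate(scores[g], t) for g, t in per_group.items()}

print(shared, round(parity_gap, 2))
print(per_group, adjusted)
```

With one threshold of 0.6, group A is approved at 80% and group B at 20%. With thresholds of 0.7 and 0.45, both groups are approved at 60%. Equal approval rates are only one of several fairness criteria, and which one fits a given credit product is a design decision.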

2) Training, validating and testing datasets:

Training, validation and testing datasets should be relevant, representative, free of errors and complete with regard to the intended purpose of the system. Manual interventions to detect and correct unfairness in these datasets might result in a more equitable credit system. Data preparation steps that deserve scrutiny include scaling of input data, data transformations, data enrichment and enhancement, manual and implicit binning, and sample balancing. New data biases that creep in over time also need watching. Personal data may be processed, but only subject to appropriate safeguards.
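Two of the checks mentioned above, completeness and sample balancing, can be automated along these lines. The sketch assumes records are plain dictionaries with hypothetical `income` and `group` fields. It reports missing values and each group's share of the data. It also computes inverse-frequency sample weights, so that every group carries the same total weight during training.

```python
from collections import Counter

def data_report(rows, required_fields, group_field):
    """Count missing values per required field and each group's share of the rows."""
    missing = {f: sum(1 for r in rows if r.get(f) is None) for f in required_fields}
    counts = Counter(r[group_field] for r in rows)
    shares = {g: c / len(rows) for g, c in counts.items()}
    return missing, shares

def balancing_weights(rows, group_field):
    """Inverse-frequency weights: each group's weights sum to len(rows) / number_of_groups."""
    counts = Counter(r[group_field] for r in rows)
    n, k = len(rows), len(counts)
    return [n / (k * counts[r[group_field]]) for r in rows]

# Hypothetical training records
rows = [
    {"income": 40, "group": "A"},
    {"income": None, "group": "A"},
    {"income": 55, "group": "A"},
    {"income": 30, "group": "B"},
]
missing, shares = data_report(rows, ["income"], "group")
weights = balancing_weights(rows, "group")
print(missing, shares, weights)
```

Group B is under-represented (25% of rows), so its single record gets weight 2.0, while each group A record gets about 0.67. Many training APIs accept such per-sample weights.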

3) Guaranteeing the transparency and traceability of the AI system:

Provide all the necessary information to assess the compliance of the systems with the requirements set for a high-risk AI system.

AI systems should be sufficiently transparent, explainable and documented. This enables the exercise of important procedural fundamental rights, such as the right to an effective remedy, a fair trial, defense and the presumption of innocence. Therefore, complete and detailed documentation on the process should be created and maintained, including system architecture, algorithmic design, model specifications, and the intended purpose of the AI system. For traceability, this also means proper version control of models and (train/test) data should be in place.
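For traceability, one lightweight practice is to record a cryptographic fingerprint of every model and dataset version, together with the system's intended purpose. The sketch below assumes models and datasets are stored as files. The manifest layout and the `write_manifest` helper are hypothetical. In practice, dedicated data and model versioning tools would usually manage this.

```python
import datetime
import hashlib
import json

def sha256_of(path):
    """SHA-256 hex digest of a file, read in chunks so large files fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

def write_manifest(model_path, data_paths, manifest_path, intended_purpose):
    """Write a JSON record linking one model file to the exact data files used."""
    record = {
        "created_utc": datetime.datetime.now(datetime.timezone.utc).isoformat(),
        "intended_purpose": intended_purpose,
        "model": {"path": model_path, "sha256": sha256_of(model_path)},
        "data": [{"path": p, "sha256": sha256_of(p)} for p in data_paths],
    }
    with open(manifest_path, "w", encoding="utf-8") as f:
        json.dump(record, f, indent=2)
    return record
```

If a model or dataset file changes by even one byte, its hash changes. An auditor can therefore check that a deployed model matches the documented version.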

4) Keeping human oversight within AI systems:

Human overseers should be able to fully understand the capacities, limitations and output of the AI system, so that they can detect and address irregularities as soon as possible. This is why automatic recording of events (‘logs’) while the AI system is operating matters: it ensures traceability. Human users should also be able to intervene in an AI system. They can do so by interrupting it through a ‘stop button’, or by disregarding, overriding or reversing its output.
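Logging and human intervention can be combined in a thin wrapper around the model. In this sketch, `OverseenScorer` is a hypothetical class. It logs every decision with Python's standard `logging` module and lets an operator stop automated scoring altogether. It also lets a reviewer override an individual decision while keeping the original outcome in the log.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("credit_decisions")

@dataclass
class OverseenScorer:
    threshold: float = 0.5
    stopped: bool = False
    decisions: dict = field(default_factory=dict)

    def stop(self, reason: str) -> None:
        """The 'stop button': block all further automated decisions."""
        self.stopped = True
        log.warning("Scoring stopped by operator: %s", reason)

    def decide(self, application_id: str, score: float) -> bool:
        """Turn a model score into a logged decision, unless scoring is stopped."""
        if self.stopped:
            raise RuntimeError("Scoring is stopped; route application to manual review")
        approved = score >= self.threshold
        self.decisions[application_id] = {"score": score, "approved": approved, "overridden_by": None}
        log.info("application=%s score=%.3f approved=%s", application_id, score, approved)
        return approved

    def override(self, application_id: str, approved: bool, reviewer: str) -> None:
        """Let a human reverse a decision; the original outcome stays in the log."""
        record = self.decisions[application_id]
        log.info("application=%s override %s -> %s by %s",
                 application_id, record["approved"], approved, reviewer)
        record.update(approved=approved, overridden_by=reviewer)
```

For example, `decide("app-1", 0.42)` returns `False` under the default threshold. A reviewer can then call `override("app-1", True, "analyst-7")`, and both events end up in the log.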

5) Designing AI systems to achieve accuracy, robustness and cybersecurity:

High-risk AI systems should be designed and developed to achieve appropriate levels of accuracy, robustness and cybersecurity. The level of accuracy and the metrics used to determine it should be communicated to the users of high-risk AI systems. Moreover, the AI system should be resilient to errors, faults or inconsistencies that may occur, and perform consistently throughout its lifecycle.
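The AIA asks providers to communicate the accuracy level and the metrics used, without prescribing which metrics. The sketch below reports plain accuracy and a simple robustness indicator: the share of decisions that flip when numeric inputs are perturbed by a small relative amount. The metric choice, the 2% noise level and the helper names are assumptions for illustration, not AIA requirements.

```python
import random

def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def flip_rate_under_noise(score_fn, applicants, threshold=0.5, noise=0.02, trials=100, seed=0):
    """Share of decisions that change when every numeric input is scaled by 1 +/- noise."""
    rng = random.Random(seed)  # fixed seed so the check is reproducible
    flips = total = 0
    for applicant in applicants:
        base = score_fn(applicant) >= threshold
        for _ in range(trials):
            noisy = {k: v * (1 + rng.uniform(-noise, noise)) for k, v in applicant.items()}
            flips += (score_fn(noisy) >= threshold) != base
            total += 1
    return flips / total

# Hypothetical one-feature score: clear-cut applicants should never flip
score = lambda a: a["x"]
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))                       # 0.75
print(flip_rate_under_noise(score, [{"x": 0.9}, {"x": 0.1}]))     # 0.0
```

A high flip rate for applicants near the decision boundary is expected. A high flip rate far from it would signal that the model is fragile.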

In the case of credit scoring, an algorithm could be regularized by adding a penalty term, weighted by an extra parameter, that penalizes the model if it starts treating subgroups differently. This can contribute to its robustness. Mitigation measures for potential feedback loops should also be put in place, for example where the model’s own past decisions end up in the data it is retrained on.
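The regularization idea can be sketched as logistic regression trained by gradient descent on the usual log loss plus a penalty `lam * gap**2`. Here `gap` is the difference in mean predicted score between two groups. This is a demographic-parity-style penalty, one of several possible choices. The data, the hypothetical zip-code flag that coincides with group membership, and the hyperparameters `lam`, `lr` and `epochs` are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, X):
    return [sigmoid(b + sum(wj * xj for wj, xj in zip(w, x))) for x in X]

def group_mean(values, idx):
    return sum(values[i] for i in idx) / len(idx)

def train(X, y, group, lam=0.0, lr=0.5, epochs=2000):
    """Gradient descent on mean log loss + lam * (mean score group 1 - mean score group 0)**2."""
    n, d = len(X), len(X[0])
    idx1 = [i for i in range(n) if group[i] == 1]
    idx0 = [i for i in range(n) if group[i] == 0]
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        p = predict(w, b, X)
        slope = [pi * (1 - pi) for pi in p]  # derivative of the sigmoid per sample
        gap = group_mean(p, idx1) - group_mean(p, idx0)
        for j in range(d):
            g_loss = sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n
            xs = [slope[i] * X[i][j] for i in range(n)]
            g_gap = group_mean(xs, idx1) - group_mean(xs, idx0)  # d(gap)/d(w_j)
            w[j] -= lr * (g_loss + 2 * lam * gap * g_gap)
        g_loss_b = sum(p[i] - y[i] for i in range(n)) / n
        g_gap_b = group_mean(slope, idx1) - group_mean(slope, idx0)  # d(gap)/d(b)
        b -= lr * (g_loss_b + 2 * lam * gap * g_gap_b)
    return w, b

def score_gap(w, b, X, group):
    """Absolute difference in mean predicted score between the two groups."""
    p = predict(w, b, X)
    idx1 = [i for i, g in enumerate(group) if g == 1]
    idx0 = [i for i, g in enumerate(group) if g == 0]
    return abs(group_mean(p, idx1) - group_mean(p, idx0))

# Hypothetical data: [income (scaled), zip-code flag]; the flag equals group membership
X = [[0.9, 1], [0.8, 1], [0.7, 1], [0.3, 1], [0.6, 0], [0.4, 0], [0.2, 0], [0.1, 0]]
y = [1, 1, 1, 0, 1, 0, 0, 0]
group = [1, 1, 1, 1, 0, 0, 0, 0]

w_plain, b_plain = train(X, y, group, lam=0.0)
w_fair, b_fair = train(X, y, group, lam=5.0)
gap_plain = score_gap(w_plain, b_plain, X, group)
gap_fair = score_gap(w_fair, b_fair, X, group)
print(round(gap_plain, 3), round(gap_fair, 3))
```

Raising `lam` trades some fit on the historical labels for a smaller difference between the groups' average scores.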


Conclusion

Today, a lack of attention to data and AI ethics exposes companies to reputational, regulatory and legal risks. That is why the biggest tech companies have built fast-growing teams to tackle the ethical problems that arise from AI.

To create future-proof AI systems, companies should study the AIA closely, as it significantly affects how AI systems may be used. These systems carry potential as well as risks, such as discrimination and the exploitation of vulnerabilities. The AIA enforces good practices for managing those risks.

Fortunately, with this new act in place, creating trustworthy AI is no longer just a socially responsible pursuit. The legislation also clarifies what Responsible AI is, and creates a level playing field by enforcing these standards across the EU.

Early movers could have a real competitive advantage on top of doing their moral duty.

* Truby, J., Brown, R., Ibrahim, I., & Parellada, O. (2021). A Sandbox Approach to Regulating High-Risk Artificial Intelligence Applications. European Journal of Risk Regulation, 1-29. doi:10.1017/err.2021.52