Artificial intelligence and music might not be two words you would put in the same sentence. However, machine learning models can be trained to produce novel music. In this example, business analytics student Sinit Tafla used artificial intelligence to compose music based on classical piano pieces by Johann Sebastian Bach.

How does AI music composition work?

To create music with artificial intelligence, you first need to collect songs and convert them into a usable format. The importance of this step should not be underestimated. In the same way that a student needs good textbooks to study for an exam, models need well-prepared data to learn the relationships within songs.

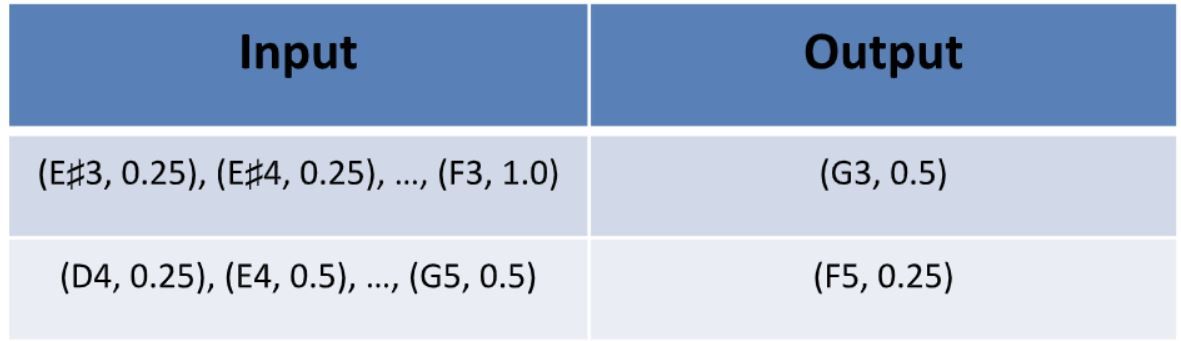

To achieve this, 200 piano pieces by Johann Sebastian Bach, a Baroque composer who lived from 1685 to 1750, were collected as Musical Instrument Digital Interface (MIDI) files. The advantage of MIDI files is that they do not store any digital audio. Instead, they contain a list of instructions, which a device (for example, a computer or cell phone) can translate into music. Each musical piece is then converted into a sequence of time steps, each consisting of two features: a note and a time interval.
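The conversion into (note, time interval) pairs can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual pipeline: it assumes the notes have already been read from a MIDI file into hypothetical `(MIDI note number, onset time in seconds)` tuples (a MIDI library such as mido or pretty_midi could supply these), and it assumes each interval is the wait after a note before the next one starts. The function names and the default `last_interval` for the final note are illustrative choices.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_name(midi_number: int) -> str:
    """Convert a MIDI note number to scientific pitch notation (60 -> 'C4')."""
    octave = midi_number // 12 - 1
    return f"{NOTE_NAMES[midi_number % 12]}{octave}"


def to_time_steps(events, last_interval=0.5):
    """Turn (midi_number, onset_seconds) events into (note, interval) pairs.

    Assumption: the interval is the time until the next note starts; the
    last note has no successor, so it gets the hypothetical `last_interval`.
    """
    events = sorted(events, key=lambda e: e[1])  # order notes by onset time
    steps = []
    for i, (pitch, onset) in enumerate(events):
        if i + 1 < len(events):
            interval = events[i + 1][1] - onset
        else:
            interval = last_interval
        steps.append((midi_to_name(pitch), round(interval, 3)))
    return steps


print(to_time_steps([(69, 0.0), (57, 0.5), (65, 1.0)]))
# [('A4', 0.5), ('A3', 0.5), ('F4', 0.5)]
```

MIDI note 69 is A4, 57 is A3 and 65 is F4, so the toy input reproduces the opening of the example sequence.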

The note features are used to analyze different melodies, whereas the time intervals allow you to examine different paces and rhythms. In the example above, a computer would play note ‘A4’ first, wait 0.5 seconds, and then play note ‘A3’. Another 0.5 seconds would pass before it plays ‘F4’, and so on.
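To make the playback reading concrete, the sketch below turns a list of (note, interval) time steps into the absolute start time of each note. It assumes, as in the description above, that each interval is the wait after a note before the next one is played; the function name `playback_schedule` is hypothetical.

```python
def playback_schedule(time_steps):
    """Convert (note, interval) pairs into (start_time_seconds, note) pairs.

    Assumption: each interval is the pause after a note before the next
    note starts, so start times are a running sum of the intervals.
    """
    schedule = []
    clock = 0.0
    for note, interval in time_steps:
        schedule.append((clock, note))
        clock += interval  # wait before playing the next note
    return schedule


print(playback_schedule([("A4", 0.5), ("A3", 0.5), ("F4", 0.5)]))
# [(0.0, 'A4'), (0.5, 'A3'), (1.0, 'F4')]
```

The notes carry the melody, while the running sum of intervals encodes the rhythm, which is why the two features can be analyzed separately.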

Training the AI music generator

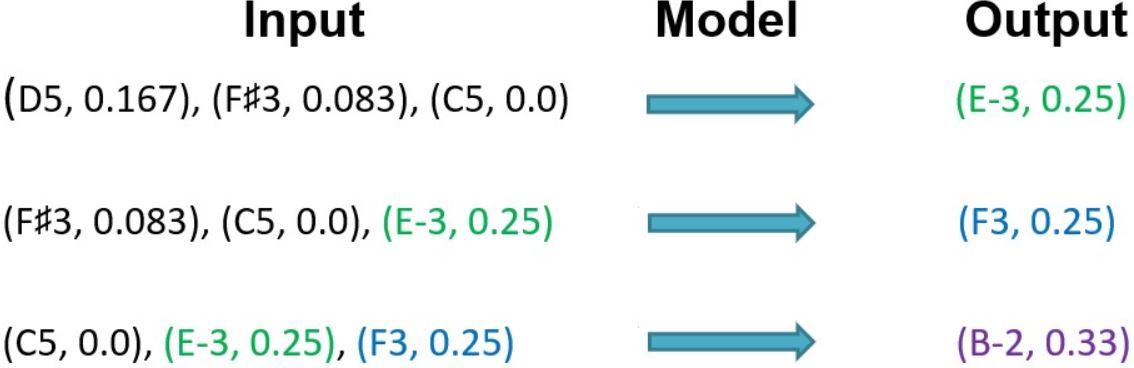

Next, the inputs and outputs need to be defined so the generator knows what it should learn. The models take a sequence of previous time steps as input. After processing this input and extracting relevant information, they predict the next note and time interval.
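One common way to build such input/output pairs is a sliding window over each piece. The sketch below is an illustration under assumptions: the source does not state how many previous time steps the models see, so `window_size=3` is a hypothetical value, and the piece is a made-up list of (note, interval) pairs.

```python
def make_training_pairs(time_steps, window_size):
    """Split one piece into (input window, next time step) training pairs."""
    pairs = []
    for start in range(len(time_steps) - window_size):
        window = time_steps[start:start + window_size]  # previous time steps
        target = time_steps[start + window_size]        # the step to predict
        pairs.append((window, target))
    return pairs


piece = [("A4", 0.5), ("A3", 0.5), ("F4", 0.5), ("E3", 0.25), ("F3", 0.25)]
for window, target in make_training_pairs(piece, window_size=3):
    print(window, "->", target)
# [('A4', 0.5), ('A3', 0.5), ('F4', 0.5)] -> ('E3', 0.25)
# [('A3', 0.5), ('F4', 0.5), ('E3', 0.25)] -> ('F3', 0.25)
```

A longer window gives the model more musical context per prediction but yields fewer training pairs from each piece.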

Make an artificial intelligence music composition!

You can judge a model’s performance by the number of mistakes it makes when predicting the next time step. In this study, predicting the next note was treated as equally important as predicting the next time interval. You can change these weights to make one of the two features more important, based on insights from the data, personal preference or intuition.
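The weighting idea can be illustrated with a simple weighted error rate. This is a sketch, not the study's evaluation code: it assumes intervals are compared exactly (that is, they were rounded to a fixed set of values beforehand), and the function name and example data are hypothetical. During training itself, a differentiable loss such as cross-entropy is usually minimized, but the same per-feature weighting applies.

```python
def weighted_error(predicted, actual, note_weight=0.5, interval_weight=0.5):
    """Weighted share of wrong notes and wrong intervals.

    Each element of `predicted` and `actual` is a (note, interval) pair.
    Equal weights treat both features as equally important.
    """
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    note_errors = sum(p[0] != a[0] for p, a in zip(predicted, actual)) / n
    interval_errors = sum(p[1] != a[1] for p, a in zip(predicted, actual)) / n
    return note_weight * note_errors + interval_weight * interval_errors


actual = [("A4", 0.5), ("A3", 0.5), ("F4", 0.25), ("E3", 0.25)]
predicted = [("A4", 0.5), ("A3", 0.25), ("G4", 0.25), ("D3", 0.25)]
print(weighted_error(predicted, actual))  # 0.375: half the notes wrong, a quarter of the intervals wrong
print(weighted_error(predicted, actual, note_weight=0.8, interval_weight=0.2))  # roughly 0.45
```

Shifting weight toward the notes makes the note mistakes count more heavily, so the same predictions score worse.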

If you’re satisfied with your model’s performance, it’s time to make some music! You start with an existing sequence of time steps from your dataset. Feed this initial input to your model to predict the next note and time interval, and save the result. In the next round, the input shifts by one time step: the first time step is dropped, the second becomes the first, the third becomes the second, and so on, and the prediction from the previous round is appended at the end. This updated input is fed to the model to produce a new prediction, which is saved as well. The process repeats until you have generated enough new time steps.

In the image below, the newly composed musical piece would consist of the following time steps: (E3, 0.25), (F3, 0.25), (B2, 0.33). Finally, the new time steps are converted into a MIDI file containing novel music. And ta-da, you have your own AI-generated music.
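The generation loop described above can be sketched as follows. It assumes `model` is any callable that takes a list of (note, interval) time steps and returns one predicted pair; the toy model used here, which simply repeats the oldest time step in its input, is a hypothetical stand-in for a trained network, chosen only so the output is easy to follow.

```python
from collections import deque


def generate(model, seed, n_new_steps):
    """Autoregressively extend `seed` by `n_new_steps` time steps.

    `model` maps a list of (note, interval) pairs to one predicted pair.
    Returns only the newly generated time steps.
    """
    window = deque(seed, maxlen=len(seed))  # oldest step drops out automatically
    generated = []
    for _ in range(n_new_steps):
        prediction = model(list(window))
        generated.append(prediction)        # save the new time step
        window.append(prediction)           # shift the input by one step
    return generated


# Hypothetical stand-in for a trained model: echo the oldest time step.
def echo_model(window):
    return window[0]


seed = [("A4", 0.5), ("A3", 0.5), ("F4", 0.5)]
print(generate(echo_model, seed, 4))
# [('A4', 0.5), ('A3', 0.5), ('F4', 0.5), ('A4', 0.5)]
```

Using a `deque` with `maxlen` implements the "shift by one time step" rule directly: appending a prediction discards the oldest entry, so the input length stays constant. The generated steps would then be written out as MIDI note events.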

AI music compositions

If you’re curious what artificial intelligence music compositions sound like, you can listen to one of the results from the artificial intelligence musician used in this study.

If you listened to it without knowing it had been created by an artificial intelligence musician, you might not be able to tell the difference. Within a few years, we could have film scores, classical music or pop songs composed by artificial intelligence, which could change the world of music as we know it.