Statistics, computers, and math. By now you might be utterly bored and thinking about clicking away. These are not the hottest topics to discuss during Friday drinks, at least not in most organisations. At the Gemeente Nijmegen they aren’t; at Xomnia they are. Yet these topics are among the foundations of data science techniques. For a year, I combined studying and thinking about the newest data science techniques with trying to explain them to my colleagues at the Gemeente Nijmegen.

Critical attitude is a blessing

At the Gemeente Nijmegen, it is not enough to present an algorithm and say that it works well. The Gemeente Nijmegen has to make decisions that are based on facts and guided by law. Applying data science magic therefore becomes a lot more difficult when you have to explain to others why it works and when it does not. This critical attitude is the best blessing an organisation can give a fresh data scientist.

Predicting air quality measurements

One of my projects was about predicting air quality measurements. Every citizen could apply for a temporary low-cost air quality measurement device. The devices produce readings of meteorological conditions and gas components in the outdoor air. However, these readings are noisy, biased, and on the wrong scale: they are electrical resistances in kOhm, not values anyone knows how to interpret. I used a modern machine learning technique to turn them into predictions that can be interpreted. “Job done!” you might think. But then along comes the citizen scientist, who wants to know what the model predicts, how it arrives at those predictions, and whether, and to what extent, the results can be trusted. Behold, a new challenge on a whole different level.

Understanding and explaining your model

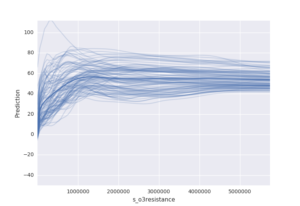

Whatever type of model you have, one thing always works as a first step to understanding and explaining it: treating your solution as a black box! What?! This might sound counterintuitive. Indeed, understanding what happens inside the box, both in theory and in practice, is mandatory for responsible algorithms. But a first step in understanding the model is seeing what it predicts and when. For example, how does the ozone prediction change when the raw ozone measurement changes? These kinds of input/output relations are easy to visualise without knowing what is inside the box, and they lead to interpretable conclusions such as “When the electrical resistance for ozone increases, the prediction of the interpretable ozone value also increases.” At that point you are halfway to understanding what your model predicts.
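One common way to make such an input/output plot is a partial dependence curve. This is not necessarily the method used in the project; it is a minimal sketch under stated assumptions. The model is only ever called through a `predict` function, one input is swept over a grid of values, and the predictions are averaged over the observed rows. The feature names, the sensor rows, and `toy_ozone_model` are all hypothetical stand-ins, not the real sensor data or trained model.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, float]


def partial_dependence(
    predict: Callable[[Row], float],
    rows: Sequence[Row],
    feature: str,
    grid: Sequence[float],
) -> List[Tuple[float, float]]:
    """Average black-box prediction as one input is swept over a grid.

    For each grid value, every row gets that value for `feature` while all
    other inputs stay unchanged. The model is queried and the predictions
    are averaged. Only `predict` is called; the model's internals are never
    inspected.
    """
    curve = []
    for value in grid:
        preds = [predict({**row, feature: value}) for row in rows]
        curve.append((value, mean(preds)))
    return curve


def toy_ozone_model(row: Row) -> float:
    # Hypothetical stand-in for a trained model: a made-up linear formula.
    return 2.0 * row["o3_resistance_kohm"] - 0.5 * row["temperature_c"] + 10.0


if __name__ == "__main__":
    # Hypothetical sensor readings, for illustration only.
    sensor_rows = [
        {"o3_resistance_kohm": 20.0, "temperature_c": 15.0},
        {"o3_resistance_kohm": 35.0, "temperature_c": 22.0},
        {"o3_resistance_kohm": 50.0, "temperature_c": 8.0},
    ]
    curve = partial_dependence(
        toy_ozone_model, sensor_rows, "o3_resistance_kohm", [10.0, 30.0, 50.0]
    )
    for resistance, avg_pred in curve:
        print(f"{resistance:5.1f} kOhm -> {avg_pred:6.2f}")
```

Plotting `curve` gives exactly the kind of picture behind a statement like “when the resistance increases, the prediction increases”. Because the curve is an average, it can hide interactions between inputs. Drawing one line per row instead of the mean is a cheap way to check for them.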

Keep asking questions

New and old data science techniques have a lot to offer. They can help to automate and improve repetitive tasks. The key to applying these techniques is always to understand what is happening. You can never ask enough questions about a data science technique that magically generates numbers. Keep asking questions, at least until the numbers are no longer magic to you. Thanks to all my colleagues at the Gemeente Nijmegen and Xomnia who did this!

Pieter Marsman